The use of SDN switches in the scenarios above can be summed up in one word: flexibility. The SDN switch mentioned here is not necessarily an OpenFlow switch. More often, a Cloud Agent is added to a traditional switch and exposes an open API (JSON-RPC or REST), which may be a more practical way to put SDN into production.
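As a rough illustration of what calling such an open API could look like, the sketch below builds a JSON-RPC 2.0 request. The envelope fields (`jsonrpc`, `method`, `params`, `id`) come from the JSON-RPC 2.0 specification; the method name `tunnel.map_vlan_to_vni`, the parameter names, and the port name are hypothetical and do not describe any particular vendor's agent.

```python
import json
from itertools import count

# Monotonic request ids so replies can be matched to requests.
_request_ids = count(1)


def build_rpc_request(method, params):
    """Return a JSON-RPC 2.0 request serialized as a JSON string."""
    return json.dumps({
        "jsonrpc": "2.0",
        "method": method,
        "params": params,
        "id": next(_request_ids),
    })


# Hypothetical method and parameter names, for illustration only.
request = build_rpc_request(
    "tunnel.map_vlan_to_vni",
    {"port": "eth-0-1", "vlan": 100, "vni": 5001},
)
```

The resulting string could be sent to the agent over HTTP or a raw TCP socket, depending on what the agent actually supports.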

SDN technology has been developing for several years, and cloud computing has an even longer history. The combination of the two is a killer application of SDN and has been a hot topic for the past two years. When well-known consulting firms assert that the SDN market is growing year by year, they are mainly referring to the application of SDN in cloud computing networks.

There are currently two main camps in the application of SDN to cloud computing networks: the "soft" camp represented by VMware, and the "hard" camp represented by Cisco. In the former, the core logic of the whole network virtualization solution is implemented in the hypervisor on the server, and the physical network is only a pipe. In the latter, the core logic of network virtualization is implemented in the physical network (mainly the edge top-of-rack switch, or TOR), and is placed on servers or other dedicated devices only when the switch cannot implement it. Each approach has its own merits and its own supporters.

But the world has never been unipolar or bipolar; it is multipolar. Real networks have many unconventional requirements that these two approaches either cannot meet, or can meet only suboptimally in terms of implementation difficulty, performance, or price. As someone who has long used hardware SDN to provide solutions for users, I would like to describe how hardware SDN switches solve some specific scenarios in real cloud computing networks. These scenarios can arise in public or private clouds, but are more likely in private clouds (including hosted clouds), where customized requirements are more common.

It should be noted that Cisco's ACI can handle all of these scenarios, because in essence the idea of ACI is to use hardware SDN to support network virtualization. However, many users do not want to use ACI for various reasons (high price, vendor lock-in, a trend toward domestic products, and so on), and they need another solution. I am not saying that ACI is bad; on the contrary, from a purely technical point of view I personally admire ACI.

Customization requirements for SDN controllers and switches in cloud computing networks

Many people misunderstand how SDN switches are applied in cloud computing networks. Two misunderstandings are the most typical. The first is that people always ask which controller we use and whether we can interoperate with OpenDaylight/Ryu/ONOS. The second is the belief that any SDN switch, from any vendor, can support a cloud computing network scenario. Both arise because many people still do not understand that SDN implies application-specific customization, and think a generic product can handle cloud computing networks.

In a cloud computing network, which is one specific SDN scenario, the controller is usually designed specifically for that scenario. It has a single function, namely meeting the requirements of the cloud computing network, and there may not even be an explicit controller: it can be hidden inside the cloud platform (for example, by implementing the logic directly in the OpenStack Neutron server). A controller built for this scenario cannot be used as a general-purpose SDN controller, and conversely a general-purpose SDN controller cannot be used directly in a cloud computing network.

The second misunderstanding follows easily from this: if even the controller must be customized for cloud computing scenarios, the SDN switches certainly must be too. Not just any SDN switch can support cloud computing network scenarios; deep, purpose-built customization is required. For example, our company, Shengke Networks, has designed the corresponding controller and switch functions specifically for this scenario.

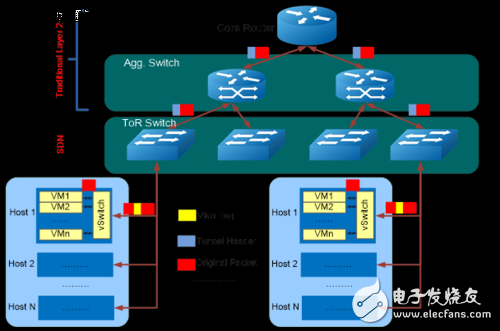

Scenario 1: Using hardware SDN switches to improve performance

In this scenario, the user deploys network virtualization using a tunnel overlay. However, tunnel processing (VXLAN or NVGRE) in the vSwitch has a large impact on performance: low throughput, high latency, and high jitter, with the exact impact depending on each vendor's implementation and optimization. An SDN TOR switch can therefore be used for tunnel offload, moving the performance-critical tunnel operations to the SDN TOR switch while all other operations remain unchanged on the server. Logically, the SDN TOR switch can be regarded as an extension of the vSwitch. Going one step further, the distributed L3 gateway can also be placed on the SDN TOR, so that the SDN TOR participates deeply in network virtualization.
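To make concrete what is being offloaded, here is a minimal sketch of the VXLAN header handling that either the vSwitch or the TOR has to perform for every packet. The 8-byte header layout (the "I" flag and the 24-bit VNI) follows RFC 7348. The outer Ethernet/IP/UDP headers are omitted, and the function names are illustrative rather than taken from any switch SDK.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag defined in RFC 7348


def vxlan_header(vni):
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags in the top byte, followed by 24 reserved bits.
    # Word 1: VNI in the top 24 bits, followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame, vni):
    """Prefix an inner Ethernet frame with a VXLAN header."""
    return vxlan_header(vni) + inner_frame


def decapsulate(payload):
    """Return (vni, inner_frame) from a VXLAN payload."""
    if len(payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    word0, word1 = struct.unpack("!II", payload[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return word1 >> 8, payload[8:]
```

A software vSwitch runs this kind of logic on the server CPU for each packet, whereas a switch chip does the equivalent in its forwarding pipeline. That difference is the motivation for the offload.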

Not all users accept this model, but some like it. So far we have deployed this scenario in several small and medium-sized private clouds and in one well-known IDC cloud. The biggest benefit for these clouds is excellent performance and stability. The data flow is shown in the figure below.

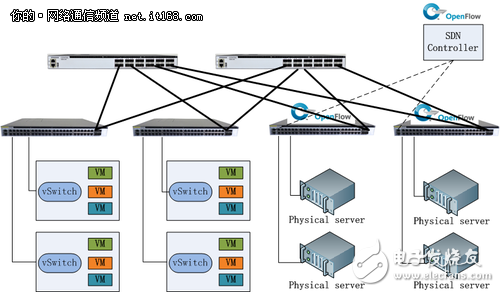

Many people assume that all servers in a cloud computing data center are virtualized. This is far from the truth. Many public and private clouds contain a large number of physical servers, and in some clouds physical servers are even the majority. The vast majority of the clouds I have worked with that have real customer deployments have this requirement. The reasons vary: some existing servers have no virtualization capability; some customers run very resource-intensive applications for which virtual machine performance is too poor or too unpredictable; some customers' servers are custom-built; some customers, for security reasons, do not want to share physical hardware with others; and some bring their own servers and do not want the cloud service provider to touch them. The reasons are all over the place, but every one of them is a real customer requirement.

With VLAN networking, this requirement is relatively easy to meet and an SDN switch is hardly needed, because isolation only requires configuring VLANs directly on an ordinary switch. But once tunnels are used, a problem arises: where is the tunnel VTEP configured? Some people say you can run a single virtual machine on the server and install a vSwitch. That can be done, but it hurts performance, is not what the customer wants, and amounts to deceiving the customer. Others say you can design a special vSwitch and install it on the server. That works in theory, but the workload is large (not only designing the vSwitch, but also the cloud platform work needed to control it), and most people cannot pull it off. What is more, if the equipment belongs to the user and the user does not want you to touch it, neither method works. For this scenario, many professional network virtualization vendors, including VMware, generally use a hardware SDN switch as the VTEP gateway to connect these physical servers to the virtual network. Nothing needs to be done on the physical server.

This scenario also places a more important requirement on the SDN switch acting as the VTEP gateway, one that switches built on the major chip vendor's current chips cannot meet: the switch must support both tunnel bridging and tunnel routing (otherwise it cannot act as a distributed L3 gateway). Switches that currently use the major vendor's chip support only the former, not the latter. Cisco's ACI can support the latter because Cisco uses its own chip. The chip vendor's later chips are said to solve this problem.
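The difference between tunnel bridging and tunnel routing at a VTEP gateway can be sketched as a simplified forwarding decision. This is only an illustration under stated assumptions: the gateway MAC, port names, VLAN, subnets, and VNIs below are hypothetical, and a real switch makes this decision in hardware tables with longest-prefix matching, not in a Python loop.

```python
import ipaddress

# Hypothetical MAC address owned by the distributed L3 gateway.
GATEWAY_MAC = "00:00:5e:00:01:01"

# Hypothetical tenant tables pushed down by the controller.
ACCESS_TO_VNI = {("eth-0-1", 100): 5001}  # (port, VLAN) of a physical server
SUBNET_TO_VNI = {
    ipaddress.ip_network("10.0.1.0/24"): 5001,
    ipaddress.ip_network("10.0.2.0/24"): 5002,
}


def forward(port, vlan, dst_mac, dst_ip):
    """Decide how a frame from a physical server enters the overlay."""
    src_vni = ACCESS_TO_VNI[(port, vlan)]
    if dst_mac.lower() != GATEWAY_MAC:
        # Tunnel bridging: stay in the same L2 segment (same VNI).
        return ("bridge", src_vni)
    # Tunnel routing: the frame is addressed to the gateway, so look up
    # the destination subnet and send it into that subnet's VNI.
    addr = ipaddress.ip_address(dst_ip)
    for subnet, vni in SUBNET_TO_VNI.items():
        if addr in subnet:
            return ("route", vni)
    return ("drop", None)
```

A switch that can only bridge would have to hand the second case to an external router, which is why it could not serve as a distributed L3 gateway.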

Shengke Networks' SDN switches use self-developed switch chips and have supported tunnel bridging and routing since the first generation of chips. SDN switches for this scenario have already been deployed in large numbers and will be deployed in multiple public clouds. The architecture of this scenario is shown in the following figure (note: the SDN control protocol is not necessarily OpenFlow; it may be a proprietary protocol).

Hardware firewalls are common in cloud computing networks, especially in enterprise private clouds and hosted clouds, and even in public clouds. Many users state plainly: my hardware firewall has served me well, and if you want me to move to the cloud, I must keep using it. This raises a problem. In a traditional network, sending user traffic through a firewall is simple: connect the firewall at the network exit, or configure an ACL to steer the traffic. In a cloud computing network, however, a firewall may serve only certain users or a group of applications, and may even be supplied by the user, so it cannot be physically connected at the network exit. Traffic must be steered to a firewall in some rack, and traditional static ACLs are not suitable, because VMs are created dynamically and policies may change dynamically. The ACLs on the switch must be configured dynamically. What is the best way to do that? Without doubt, an SDN switch. Having policy follow the workload dynamically is a strength of SDN, and it is also the core of Cisco's ACI.
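The idea of a policy that follows a VM can be sketched as a small piece of controller bookkeeping: when the cloud platform reports that a VM has moved, the redirect rule is withdrawn from the old switch port and installed on the new one. The class, method names, and port names below are hypothetical. A real controller would also push the rule to the switch through its API, which is omitted here.

```python
class PolicyController:
    """Tracks which switch ports need a redirect-to-firewall rule."""

    def __init__(self):
        self.firewall_port = {}  # vm_ip -> firewall-facing port
        self.location = {}       # vm_ip -> (switch, port)
        self.installed = {}      # (switch, port) -> set of vm_ips with a rule

    def attach_policy(self, vm_ip, firewall_port):
        """Require that this VM's traffic be redirected to a firewall."""
        self.firewall_port[vm_ip] = firewall_port
        if vm_ip in self.location:
            self._install(vm_ip, self.location[vm_ip])

    def vm_moved(self, vm_ip, switch, port):
        """Handle a VM create/migrate event from the cloud platform."""
        old = self.location.get(vm_ip)
        if old is not None:
            # Withdraw the rule from the port the VM just left.
            self.installed.get(old, set()).discard(vm_ip)
        self.location[vm_ip] = (switch, port)
        if vm_ip in self.firewall_port:
            self._install(vm_ip, (switch, port))

    def _install(self, vm_ip, where):
        self.installed.setdefault(where, set()).add(vm_ip)
```

For example, after `attach_policy("10.0.1.7", "eth-0-48")` and two `vm_moved` calls, only the most recent port still carries the rule for that VM.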

If tunnels are used in the cloud computing network, the problem becomes more troublesome. Many hardware firewalls do not support tunnels, so the tunnel must be terminated somewhere else and converted to a VLAN before the traffic reaches the firewall. What is best placed to do this? Without doubt, an SDN switch that supports tunnels.

Some people object that the firewall side will still be limited to 4K VLANs. In fact, because the tunnel is converted to a VLAN at the switch, the VLAN only needs to be unique per port, not globally unique. This does require support from the switch, and Shengke's SDN switches support it well.
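The per-port VLAN scope described above amounts to keying the VLAN-to-tunnel mapping on the pair (port, VLAN) instead of on the VLAN alone. The sketch below shows that data structure. The class, port names, and VNI values are hypothetical and are not Shengke's actual implementation.

```python
class PortScopedVlanMap:
    """Maps (port, VLAN) pairs to tunnel VNIs and back."""

    def __init__(self):
        self._to_vni = {}   # (port, vlan) -> vni
        self._to_vlan = {}  # (port, vni) -> vlan

    def bind(self, port, vlan, vni):
        if not 1 <= vlan <= 4094:
            raise ValueError("VLAN ID must be in 1..4094")
        if (port, vlan) in self._to_vni or (port, vni) in self._to_vlan:
            raise ValueError("binding already exists on this port")
        self._to_vni[(port, vlan)] = vni
        self._to_vlan[(port, vni)] = vlan

    def vni_for(self, port, vlan):
        """Firewall-to-overlay direction: pick the tunnel for a tagged frame."""
        return self._to_vni[(port, vlan)]

    def vlan_for(self, port, vni):
        """Overlay-to-firewall direction: pick the tag to apply on this port."""
        return self._to_vlan[(port, vni)]


vlan_map = PortScopedVlanMap()
vlan_map.bind("eth-0-1", 100, 5001)
vlan_map.bind("eth-0-2", 100, 5002)  # same VLAN ID, different port and tenant
```

Because the key includes the port, VLAN 100 can be reused on every firewall-facing port, so the 4094-ID limit applies per port instead of across the whole network.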

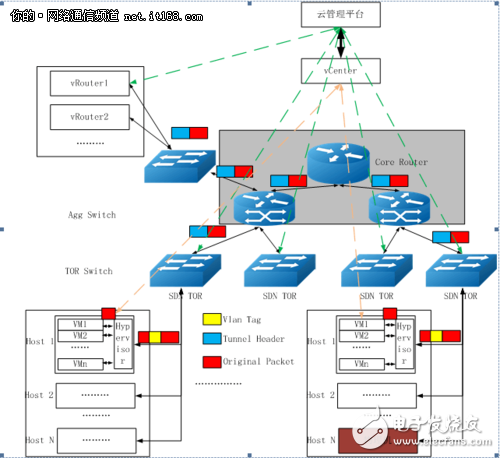

Scenario 4: Supporting multiple Hypervisor hybrid networking using hardware SDN switches

Although we say multiple hypervisors, in practice the most common case is a mix of VMware and other hypervisors. Open source cloud platforms and third-party neutral private cloud platforms support KVM and Xen well and can fully control them. VMware, however, is a closed-source hypervisor and cannot be controlled at will. Many customers already run older VMware products. Now that VPC is popular, whether as a fashion or from real need, they all want VPC support, especially VPC based on a tunnel overlay. Some say this is easy: doesn't VMware have NSX for exactly this? NSX does provide drivers for OpenStack, but it is very expensive, and customers either cannot afford it or consider it uneconomical. These customers want to introduce some open source KVM or Xen hosts without discarding their existing VMware, and they want all of these hypervisors to form one VPC network together. So what should they do?

An effective solution is to connect the servers running VMware through an SDN switch. The cloud platform calls VMware's interface to configure VMware, uses a VLAN to identify each tenant's network, and then converts the VLAN to a tunnel on the SDN switch. If traffic needs to be sent to a firewall for filtering, that can also be done through the SDN switch. One of our partners, an industry cloud service provider, has successfully deployed this solution for its industry customers, who use VMware products extensively. We have also found many private clouds with similar needs. To put it bluntly, they do not want to pay for NSX, but they want some of NSX's features. The architecture of the solution is shown in the figure below.

This scenario is not a hard requirement: some customers do not care about it, but some do. Many small private clouds still use VLAN networking, since it is easy to deploy and performs well. However, besides scaling less well than a tunnel overlay, VLAN networking has another small problem. VMs can be migrated easily, and each VM is tied to a specific VLAN, so when a VM migrates, its VLAN has to follow. In a VLAN networking solution, the VLAN must be visible to the intermediate physical network, which means the VLAN configuration on the switch ports has to change dynamically. To get around this, the common practice today is to pre-configure all possible VLANs on all ports of all switches. The problem with this is that all broadcast (such as ARP/DHCP), multicast, and unknown unicast packets are sent to every server on the whole physical network, only to be discarded at the server. On the one hand this wastes bandwidth, and on the other hand it creates potential security issues.

A very simple solution to this problem is to introduce an SDN switch that configures VLANs dynamically as needed.
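One way to picture this dynamic configuration is as a reconciliation step: derive the VLANs each port actually needs from the current VM placement, then compute what must be added to or removed from each port. The following is a minimal sketch under that assumption. The function names, port names, and data shapes are illustrative, and pushing the changes to the switch is left out.

```python
def required_vlans(placements):
    """placements: iterable of (port, vlan) pairs, one per running VM."""
    needed = {}
    for port, vlan in placements:
        needed.setdefault(port, set()).add(vlan)
    return needed


def vlan_changes(current, placements):
    """Return per-port VLANs to add and remove so ports match VM placement.

    current: dict mapping port -> set of VLANs configured on it now.
    """
    needed = required_vlans(placements)
    changes = {}
    for port in sorted(set(current) | set(needed)):
        have = current.get(port, set())
        want = needed.get(port, set())
        add, remove = want - have, have - want
        if add or remove:
            changes[port] = {"add": sorted(add), "remove": sorted(remove)}
    return changes


# A VM in VLAN 200 has migrated from the server on eth-0-1 to eth-0-2.
changes = vlan_changes(
    {"eth-0-1": {100, 200}, "eth-0-2": set()},
    [("eth-0-1", 100), ("eth-0-2", 200)],
)
```

With only the needed VLANs present on each port, broadcast and unknown unicast traffic for a VLAN reaches only the servers that host VMs in that VLAN.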
