Since Apple launched the iPhone X with Face ID, discussion of 3D sensing has grown. Here we present an in-depth analysis of the industry.

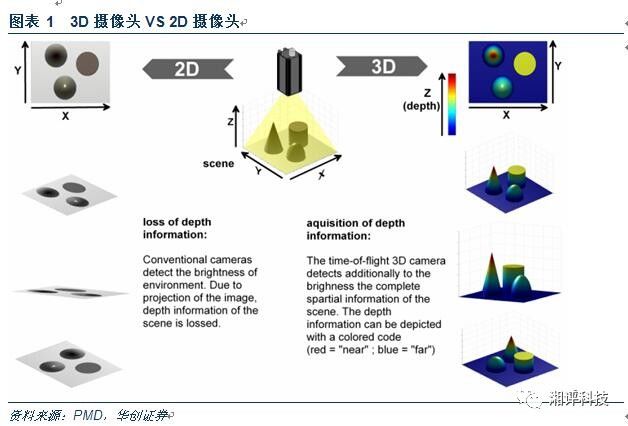

What is a 3D camera? A 3D camera captures not only a 2D image of the subject but also its depth information, including three-dimensional position and size. This is typically achieved by combining multiple cameras with depth sensors. The 3D camera collects 3D information in real time, gives consumer electronics the ability to sense objects, and opens up a range of "pain-point" application scenarios such as human-computer interaction, face recognition, 3D modeling, AR, security and assisted driving. We believe the transition from 2D to 3D cameras will be the fourth revolution in cameras, after black-and-white to color, low to high resolution, and still images to video. It is expected to trigger another boom in the consumer electronics supply chain! In short, touchscreens moved interaction from one dimension to a plane, while 3D cameras will move it from the plane into three-dimensional space.

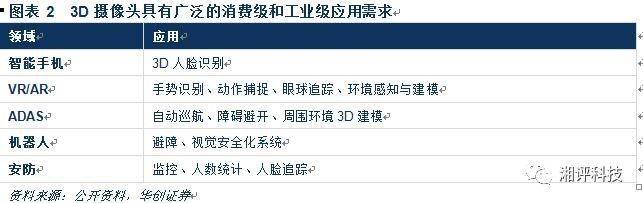

From the consumer's perspective, what disruptive applications become possible? The 3D camera captures real-time depth, three-dimensional size and spatial information about objects in the environment, providing the technical foundation for "pain-point" scenarios such as motion capture, 3D modeling, VR/AR, indoor navigation and positioning. It therefore has broad consumer and industrial demand. At present, the main scenarios where 3D cameras can show their potential include motion capture and face recognition in consumer electronics; 3D modeling, cruising and obstacle avoidance in autonomous driving; part scanning and sorting in industrial automation; and monitoring and statistics in security, among others.

We believe that with major customers introducing 3D camera technology this year, face recognition and gesture recognition applications will take off first, and the market is expected to grow explosively! According to Zion Research, the 3D camera market will grow from $1.25 billion in 2015 to $7.89 billion in 2021, a compound annual growth rate of about 35%! Our industry chain research suggests a unit price of $13-18. At a 20% penetration rate of the 1.8 billion smartphones expected in 2021, that is about 360 million phones, or $4.7-6.5 billion with one module per phone and roughly $9-13 billion if each phone carries two modules. Adding AR, autonomous driving, robotics and other fields, the total 3D camera market is expected to exceed $20 billion!
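The growth rate and smartphone market figures above follow from simple arithmetic. The sketch below uses only numbers quoted in the text; the number of 3D modules per phone is an assumption varied for illustration, not part of the cited forecasts.

```python
# Quick check of the market arithmetic (inputs are the figures quoted in the text).

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def smartphone_tam(phones: float, penetration: float, unit_price: float,
                   modules_per_phone: int = 1) -> float:
    """Addressable market in dollars: phones x penetration x modules x price per module."""
    return phones * penetration * modules_per_phone * unit_price

# Zion Research: $1.25B (2015) -> $7.89B (2021), i.e. six years of growth.
rate = cagr(1.25e9, 7.89e9, 2021 - 2015)
print(f"implied CAGR: {rate:.1%}")

# 20% of 1.8B smartphones at $13-18 per module; modules per phone is an assumption.
for modules in (1, 2):
    low = smartphone_tam(1.8e9, 0.20, 13.0, modules)
    high = smartphone_tam(1.8e9, 0.20, 18.0, modules)
    print(f"{modules} module(s) per phone: ${low / 1e9:.2f}B - ${high / 1e9:.2f}B")
```

The implied growth rate comes out at roughly 36%, close to the quoted 35%; the smartphone figure depends heavily on how many modules each phone carries.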

(2) "Pain-point" application scenarios keep emerging, ushering in an explosion as 3D sensing goes from standard equipment on phones to a feature smart terminals race to adopt

1. Scene 1: Face recognition's breakout year, standing out as the successor to fingerprint recognition

As smartphone makers emphasize differentiation and seek innovation, face recognition is expected to become the next major innovation in consumer electronics and to bring investment opportunities across the industry chain.

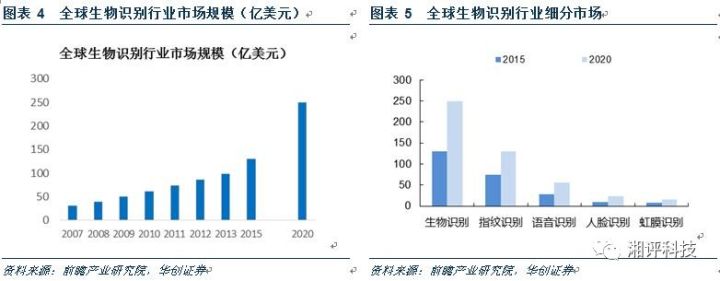

In terms of market growth, the technology most likely to stand out after fingerprint recognition is face recognition. According to the China Foreground Industry Research Institute, the global biometrics market grew at an average annual rate of 21.7% from 2007 to 2013. The forecast growth of each segment's market size from 2015 to 2020 is: fingerprint 73.3%, voice 100%, face 166.6%, iris 100% and others 140%. Among the many biometric technologies, face recognition ranks first in growth, and its market is expected to reach $2.4 billion by 2020. We expect the market to exceed this forecast as face recognition penetrates smart terminals.

Current face recognition solutions fall mainly into three types: 2D recognition, 3D recognition and thermal recognition. 2D face recognition works on planar images, but because a face is not flat, projecting 3D facial information onto a plane loses feature information. 3D recognition builds a three-dimensional model of the face, retaining as much valid information as possible. 3D face recognition is therefore better founded and more accurate.

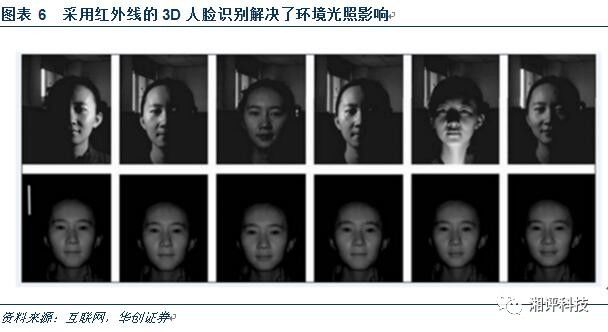

3D camera technologies, represented by TOF and structured light, are best suited to face recognition. First, 3D cameras use infrared light as their light source, which avoids interference from ambient visible light. With traditional 2D recognition, changes in ambient lighting cause recognition performance to drop sharply, failing the needs of real-world systems. For example, a face lit from the side (the so-called "yin-yang face") may not be recognized correctly.

TOF or structured-light 3D cameras capture the depth of the face when shooting, acquiring more feature information and greatly improving recognition accuracy over traditional face recognition. Compared with 2D systems, 3D face recognition can collect depth features such as the distance between the eye corners, the position of the nose tip, the distance between the temples and the distance from ear to eye. These parameters generally change little with cosmetic changes to the face or hair, so 3D face recognition can maintain very high accuracy even when the user's appearance changes.
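To illustrate why depth features help, the sketch below turns a handful of 3D facial landmarks into pairwise distances. The landmark names and coordinates are hypothetical, and real systems use far denser models and learned matchers; the point is that distances between 3D points do not change when the head turns or moves, whereas their 2D projections do.

```python
import itertools
import math

# Hypothetical 3D landmark positions (metres, camera coordinates) from a depth camera.
LANDMARKS = {
    "left_eye_corner": (-0.045, 0.030, 0.50),
    "right_eye_corner": (0.045, 0.030, 0.50),
    "nose_tip": (0.000, -0.010, 0.47),
    "left_temple": (-0.070, 0.040, 0.53),
    "right_temple": (0.070, 0.040, 0.53),
}

def distance_features(points):
    """Pairwise Euclidean distances in a fixed (sorted-name) order: a simple face signature."""
    names = sorted(points)
    return [math.dist(points[a], points[b]) for a, b in itertools.combinations(names, 2)]

def rotate_y(points, angle_rad, shift=(0.0, 0.0, 0.0)):
    """Rigidly turn the head about the vertical axis, then translate it."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return {
        name: (c * x + s * z + shift[0], y + shift[1], -s * x + c * z + shift[2])
        for name, (x, y, z) in points.items()
    }

def match_score(f1, f2):
    """Mean absolute difference between two signatures (lower means more similar)."""
    return sum(abs(a - b) for a, b in zip(f1, f2)) / len(f1)

enrolled = distance_features(LANDMARKS)
probe = distance_features(rotate_y(LANDMARKS, math.radians(20), shift=(0.02, 0.0, 0.1)))
print(f"score after head turn: {match_score(enrolled, probe):.2e}")  # ~0: pose-invariant
```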

2. Scene 2: Gesture recognition, the core pain point of human-computer interaction

The history of human-computer interaction is really a process of continually adapting the machine to free the person. In the earliest computers, the keyboard was the only input device. With the arrival of the graphical user interface (GUI), the "keyboard and mouse" combination took shape. However, precise mouse clicks and typing still carry a high learning cost. As devices shrank, users were freed further, and the touchscreen on mobile phones finally removed the keyboard and mouse as intermediaries, allowing direct touch. Over the next decade, human-computer interaction will become smarter and more convenient, freeing users from touching the screen: actively capturing and recognizing user gestures will become the next pain point in interaction!

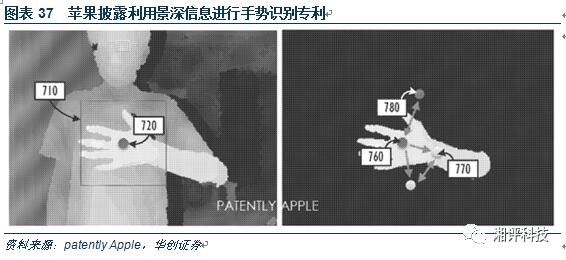

The key to gesture recognition lies in 3D camera (3D sensing) technology. The 3D camera uses TOF or structured light to obtain depth information, and algorithms then recognize the user's gestures, allowing the user to control the smart terminal. According to a MarketsandMarkets study, the proximity sensor market is expected to reach $3.7 billion in 2020, growing at a compound annual rate of 5.3% from 2015 to 2020.
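A toy version of the pipeline just described: segment the hand as the nearest region in each depth frame, track its centroid, and classify a left or right swipe from the net horizontal motion. The synthetic frames, the 10 cm segmentation band and the swipe threshold are hypothetical choices for illustration; production systems use learned hand-pose models.

```python
from typing import List, Optional, Tuple

DepthFrame = List[List[float]]  # depth in metres; 0.0 means "no return"

def hand_centroid(frame: DepthFrame, band_m: float = 0.10) -> Optional[Tuple[float, float]]:
    """Centroid (col, row) of pixels within band_m of the nearest valid depth."""
    valid = [d for row in frame for d in row if d > 0.0]
    if not valid:
        return None
    nearest = min(valid)
    pts = [(c, r) for r, row in enumerate(frame) for c, d in enumerate(row)
           if 0.0 < d <= nearest + band_m]
    return (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))

def classify_swipe(frames: List[DepthFrame], min_shift_px: float = 2.0) -> str:
    """Label a frame sequence as 'swipe_left', 'swipe_right' or 'none'."""
    track = [c for c in (hand_centroid(f) for f in frames) if c is not None]
    if len(track) < 2:
        return "none"
    dx = track[-1][0] - track[0][0]  # net horizontal motion of the hand
    if dx >= min_shift_px:
        return "swipe_right"
    if dx <= -min_shift_px:
        return "swipe_left"
    return "none"

def synthetic_frame(hand_col: int, width: int = 8, height: int = 4) -> DepthFrame:
    """Background at 2 m with a 2x2 'hand' at 0.5 m starting at hand_col."""
    frame = [[2.0] * width for _ in range(height)]
    for r in (1, 2):
        for c in (hand_col, hand_col + 1):
            frame[r][c] = 0.5
    return frame

frames = [synthetic_frame(col) for col in (1, 3, 5)]
print(classify_swipe(frames))  # swipe_right
```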

3. Scene 3: 3D reconstruction, a foundational technology that will shine in AR/VR

Why should AR/VR equipment adopt 3D camera technology? For two reasons: (1) to obtain RGB and depth data of the surrounding environment for 3D reconstruction; (2) to enable human-computer interaction such as gesture recognition and motion capture.

AR/VR devices generally use one of two active 3D sensing technologies, TOF or structured light. The front of the device usually carries an infrared emitter, an infrared sensor (acquiring depth information) and several environment cameras (acquiring RGB information). Taking TOF as an example, the infrared emitter sends out infrared light, which reflects off the target object and is received by the infrared sensor; the distance/depth is then calculated from the phase difference between the transmitted and received signals.
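For a continuous-wave TOF sensor, the phase difference Δφ between the emitted and received signals maps to distance as d = c·Δφ / (4π·f_mod), where f_mod is the modulation frequency (the light travels out and back, hence 4π rather than 2π). Because phase wraps every 2π, targets beyond c / (2·f_mod) alias to shorter distances. The sketch assumes ideal sinusoidal modulation and a hypothetical 20 MHz frequency.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """Distance for a measured phase shift (round trip, hence 4*pi)."""
    return C * (phase_rad % (2 * math.pi)) / (4 * math.pi * f_mod_hz)

def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance measurable before the phase wraps around."""
    return C / (2 * f_mod_hz)

def phase_for_distance(distance_m: float, f_mod_hz: float) -> float:
    """Forward model: wrapped phase produced by a target at distance_m."""
    return (4 * math.pi * f_mod_hz * distance_m / C) % (2 * math.pi)

f_mod = 20e6  # hypothetical 20 MHz modulation
print(f"unambiguous range: {unambiguous_range(f_mod):.2f} m")
for true_d in (1.5, 9.0):
    measured = distance_from_phase(phase_for_distance(true_d, f_mod), f_mod)
    print(f"target at {true_d} m -> measured {measured:.2f} m")
```

The 9 m target reads as about 1.5 m because it lies beyond the roughly 7.5 m unambiguous range; this is one reason TOF measurement range is limited, and why real sensors often combine several modulation frequencies.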

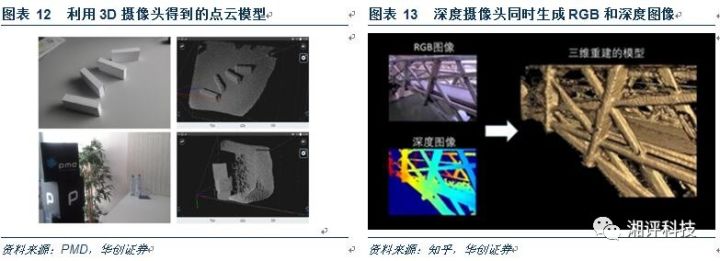

In the early stage, the three-dimensional model in the scene was reconstructed by two-dimensional images with different angles, and the realism was low. The appearance of the depth camera greatly improved the three-dimensional reconstruction effect. The depth camera can simultaneously acquire the RGB data and depth data of the image and perform 3D reconstruction based on this.

The following simple scenario illustrates 3D reconstruction with a 3D camera. A 3D camera based on TOF or structured light can build a "point cloud" of the surrounding environment, as shown on the left, with different distances from the lens shown in different colors. Combining the point cloud with the RGB information of the environment image restores the scene, as shown on the right. Many applications can then be built on it, such as ranging, virtual shopping and interior decoration; the right image, for example, shows virtual furniture placement. Because the restored scene contains depth information, the virtual furniture stops when it meets an obstacle instead of passing through it, which makes the result highly realistic.
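Turning a depth image into a point cloud is commonly done by back-projecting each pixel through a pinhole camera model: X = (u - cx)·Z / fx, Y = (v - cy)·Z / fy. The intrinsics (fx, fy, cx, cy) and the tiny depth image below are hypothetical; real values come from camera calibration, and an RGB value can be attached to each point once the color and depth images are registered.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]

def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project every valid depth pixel (u, v, Z) to camera-space (X, Y, Z)."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:          # no depth measured at this pixel
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

def colorize(points: List[Point], near: float, far: float) -> List[Tuple[int, int, int]]:
    """Map depth to a red (near) to blue (far) color, as in false-color point cloud views."""
    colors = []
    for _, _, z in points:
        t = min(max((z - near) / (far - near), 0.0), 1.0)
        colors.append((int(255 * (1 - t)), 0, int(255 * t)))
    return colors

depth = [[1.0, 1.0, 0.0],
         [2.0, 2.0, 2.0]]
pts = depth_to_points(depth, fx=500.0, fy=500.0, cx=1.0, cy=0.5)
print(len(pts), pts[0])
print(colorize(pts, near=1.0, far=2.0))
```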

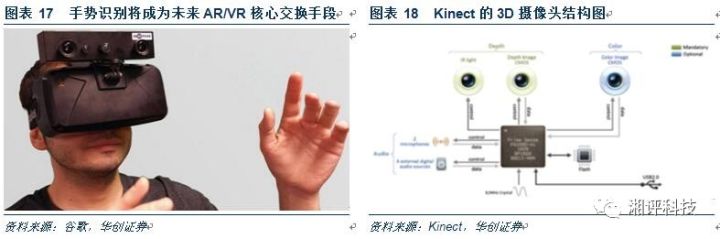

In addition, the gesture recognition provided by 3D cameras will become the core means of interaction in future AR/VR. At present, most VR devices from major manufacturers require handheld controllers. Controllers offer timely, well-integrated feedback, but allow little interaction with the virtual environment: the user can only control, not participate. In AR applications, a handheld controller cannot handle the interaction at all. AR applications involve rich and complex human-computer interaction that only gestures can accomplish. HoloLens, for example, has a set of four environment-sensing cameras and one depth camera: the environment cameras are used for head tracking, and the depth camera assists gesture recognition and 3D reconstruction of the environment.

In addition to HoloLens, AR products such as Meta 2, HiAR Glasses and Epson Moverio also use 3D sensing for gesture recognition and motion capture. We expect 3D cameras based on TOF or structured light, as the basis for gesture recognition and 3D scene reconstruction, to become standard on AR equipment!

Second, the 3D Sensing camera: a long-planned change, with its introduction in the iPhone 8 imminent!

(1) The technical routes have matured: TOF and structured light

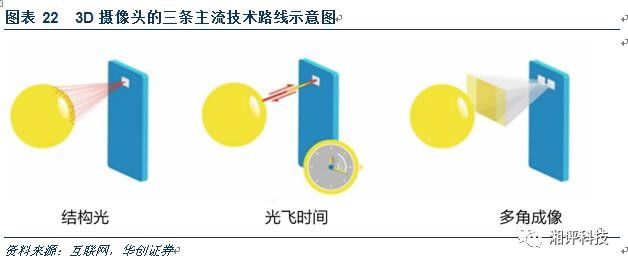

The 3D camera has three main technical routes: TOF (time of flight), structured light, and multi-angle imaging (also known as binocular stereo vision or multi-camera). Judging from current technology development and product applications, TOF and structured light are the most promising thanks to their ease of use and low cost.

1. Time of flight (TOF) technology

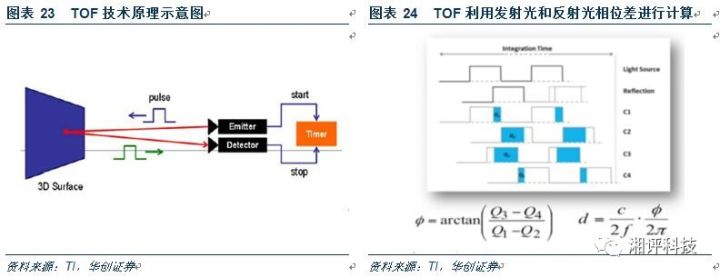

TOF technology actively emits a modulated light signal toward the target surface, receives the reflected light with a sensor, and converts the phase difference between the emitted and received signals into distance/depth data.
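Continuous-wave TOF pixels typically do not read the phase difference directly. A common approach samples the correlation between emitted and received light at four offsets (0°, 90°, 180°, 270°) and recovers phase and amplitude with an arctangent. This sketch assumes an ideal sinusoidal correlation model and a hypothetical 20 MHz modulation; the sign convention and sample order differ between sensors.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def correlation_samples(phase: float, amplitude: float, offset: float):
    """Ideal four-bucket samples s_k = offset + amplitude * cos(phase + k * 90 deg)."""
    return [offset + amplitude * math.cos(phase + k * math.pi / 2) for k in range(4)]

def demodulate(s0: float, s1: float, s2: float, s3: float):
    """Recover (phase, amplitude, offset) from the four samples."""
    i = s0 - s2                          # = 2 * amplitude * cos(phase)
    q = s3 - s1                          # = 2 * amplitude * sin(phase)
    phase = math.atan2(q, i) % (2 * math.pi)
    amplitude = math.hypot(i, q) / 2
    offset = (s0 + s1 + s2 + s3) / 4     # ambient light plus DC level
    return phase, amplitude, offset

f_mod = 20e6                             # hypothetical modulation frequency
samples = correlation_samples(phase=1.0, amplitude=50.0, offset=200.0)
phase, amp, off = demodulate(*samples)
distance = C * phase / (4 * math.pi * f_mod)
print(f"phase={phase:.3f} rad, amplitude={amp:.1f}, distance={distance:.3f} m")
```

The amplitude indicates how much emitted light came back (a confidence measure), while ambient light raises the offset and its noise, one reason strong sunlight degrades TOF outdoors.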

TOF computes depth pixel by pixel and can be highly accurate at close range. Its disadvantages are that outdoors it is affected by natural light and its infrared component, its measurement range is limited (accuracy cannot be guaranteed at long distances), and it costs more than structured light.

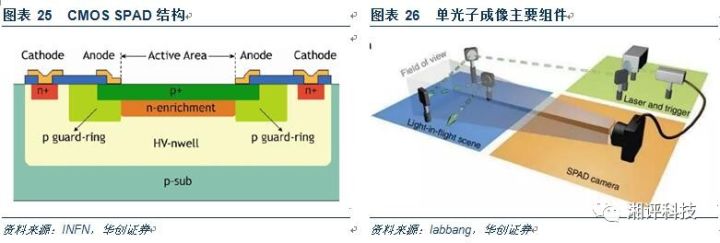

Current mainstream TOF technology uses a SPAD (single-photon avalanche diode) array to precisely detect and record the timing and spatial position of photons, and then reconstructs the scene in three dimensions with a reconstruction algorithm. A SPAD is a highly sensitive semiconductor photodetector widely used to detect weak light signals.
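SPAD-based sensors usually measure time of flight directly: photon arrival times collected over many laser pulses are accumulated into a histogram, the peak is taken as the round-trip time t, and distance is d = c·t/2. The simulation below is a sketch with hypothetical parameters (photon counts, 100 ps bins, 50 ps timing jitter, uniform background photons).

```python
import math
import random

C = 299_792_458.0  # speed of light, m/s

def simulate_timestamps(distance_m, n_signal, n_noise, window_s, jitter_s, seed=0):
    """Hypothetical photon arrival times (s) collected by one SPAD pixel over many pulses."""
    rng = random.Random(seed)
    round_trip_s = 2.0 * distance_m / C
    signal = [rng.gauss(round_trip_s, jitter_s) for _ in range(n_signal)]
    noise = [rng.uniform(0.0, window_s) for _ in range(n_noise)]  # ambient light, dark counts
    return signal + noise

def distance_from_histogram(timestamps, window_s, bin_s):
    """Histogram arrival times, take the peak bin as the round-trip time, return c*t/2."""
    n_bins = int(round(window_s / bin_s))
    hist = [0] * n_bins
    for t in timestamps:
        idx = math.floor(t / bin_s)
        if 0 <= idx < n_bins:
            hist[idx] += 1
    peak = max(range(n_bins), key=hist.__getitem__)
    round_trip_s = (peak + 0.5) * bin_s   # centre of the peak bin
    return C * round_trip_s / 2.0

stamps = simulate_timestamps(1.2, n_signal=200, n_noise=1000,
                             window_s=50e-9, jitter_s=50e-12)
print(f"estimated distance: {distance_from_histogram(stamps, 50e-9, 100e-12):.3f} m")
```

With 100 ps bins, each bin spans about 1.5 cm of distance (c × 100 ps / 2), so timing resolution directly sets depth resolution.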

2. Structured light technology

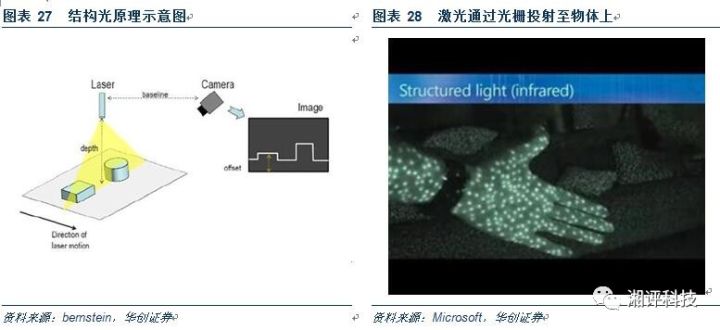

The basic principle of structured light is to place a grating in front of the laser. The laser light is diffracted as it passes through the grating, so the spots it projects onto the object's surface are displaced. When the object is closer to the laser projector, the displacement is smaller; when it is farther away, the displacement is correspondingly larger. A camera captures the pattern projected onto the object's surface, and an algorithm computes the object's position and depth from the pattern's displacement, restoring the entire three-dimensional space.
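One common way to formalize this, often used to describe Kinect-style systems, records the projected pattern on a flat reference plane at a known distance z0 and measures each dot's shift s (in pixels) relative to that reference. With focal length f (pixels) and projector-camera baseline b, s = f·b·(1/z0 - 1/z): zero on the reference plane, negative for closer objects and growing as the object moves farther away. The calibration values below are hypothetical, and the sign of s depends on the geometry.

```python
def shift_for_depth(z_m: float, z0_m: float, focal_px: float, baseline_m: float) -> float:
    """Pixel shift of a pattern dot at depth z relative to the reference plane z0."""
    return focal_px * baseline_m * (1.0 / z0_m - 1.0 / z_m)

def depth_from_shift(shift_px: float, z0_m: float, focal_px: float, baseline_m: float) -> float:
    """Invert the reference-plane model: 1/z = 1/z0 - s / (f * b)."""
    inv_z = 1.0 / z0_m - shift_px / (focal_px * baseline_m)
    if inv_z <= 0.0:
        raise ValueError("shift too large: point would lie at or beyond infinity")
    return 1.0 / inv_z

# Hypothetical calibration: 580 px focal length, 7.5 cm baseline, reference plane at 1 m.
F_PX, BASELINE_M, Z0_M = 580.0, 0.075, 1.0
for z in (0.8, 1.0, 1.5, 3.0):
    s = shift_for_depth(z, Z0_M, F_PX, BASELINE_M)
    z_back = depth_from_shift(s, Z0_M, F_PX, BASELINE_M)
    print(f"z={z:.1f} m  shift={s:+6.2f} px  recovered z={z_back:.3f} m")
```

Differentiating gives dz/ds = z²/(f·b), so a one-pixel error in locating a dot costs more depth accuracy the farther away the object is.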

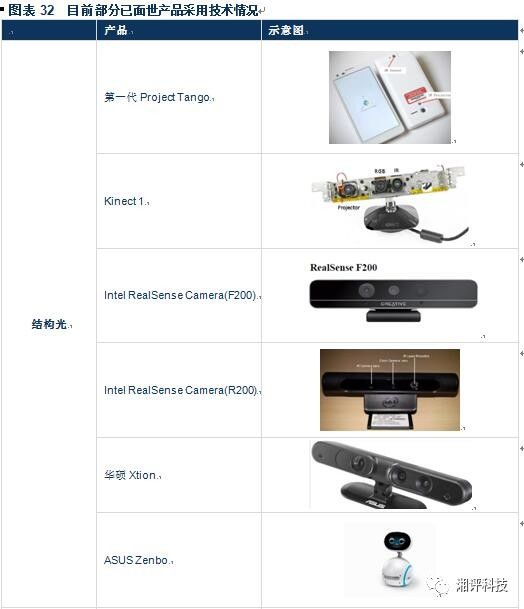

Representative products using structured light technology include Kinect 1, the Intel RealSense cameras (F200 and R200) and first-generation Project Tango products.

The advantage of structured light is that depth information can be read in a single exposure. Its disadvantages are that resolution is limited by the grating width and the wavelength of the light source, the requirements on the diffractive optical element (DOE) are high, and it is strongly affected by the infrared component of ambient light.

3. Multi-camera technology

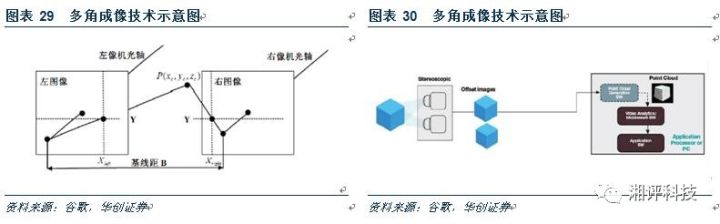

Multi-angle imaging is based on the parallax principle: imaging devices capture two images of the measured object from different positions, and the object's three-dimensional geometry is obtained by computing the positional offset (disparity) between corresponding points in the two images.
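For a rectified stereo pair with focal length f (pixels) and baseline B, a point with disparity d pixels lies at depth Z = f·B/d. Finding d is the expensive part. The sketch below uses a brute-force sum-of-absolute-differences (SAD) search along one scanline, a simple stand-in for the more elaborate matchers used in practice; the image rows and camera parameters are synthetic.

```python
import random

def match_disparity(left_row, right_row, x, half_win, max_disp):
    """Disparity (pixels) minimising the SAD cost for pixel x of the left scanline."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        xr = x - d                     # in a rectified pair the match shifts left
        if xr - half_win < 0:
            break                      # window would fall off the right image
        cost = sum(abs(left_row[x + k] - right_row[xr + k])
                   for k in range(-half_win, half_win + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(d_px, focal_px, baseline_m):
    """Z = f * B / d for a rectified stereo pair."""
    if d_px <= 0:
        raise ValueError("zero disparity: no match or point at infinity")
    return focal_px * baseline_m / d_px

# Synthetic textured scanline; the right view sees the same texture shifted by 5 px.
rng = random.Random(1)
texture = [rng.randrange(256) for _ in range(80)]
left, right = texture[:70], texture[5:75]
d = match_disparity(left, right, x=40, half_win=3, max_disp=16)
print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.06))
```

This nested search over pixels, candidate disparities and window elements illustrates why multi-angle imaging is computationally expensive, and why it needs surface texture to find correspondences.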

The advantages of multi-angle imaging are that it works both indoors and outdoors, is not affected by sunlight and is hardly affected by transparent obstructions. Its disadvantages are heavy computation, complex algorithms and demanding hardware requirements.

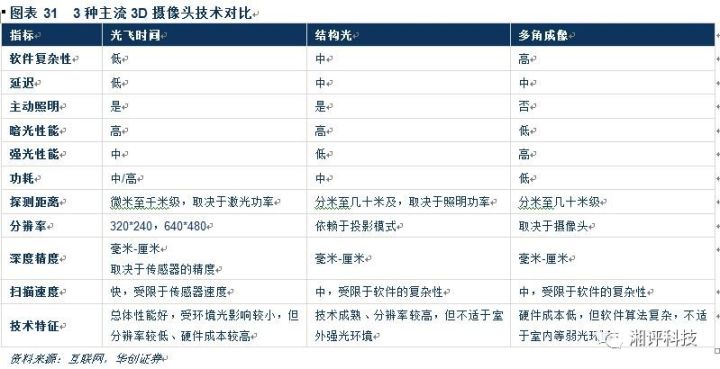

The following table compares the three mainstream technologies in terms of software complexity, latency, active illumination, detection distance, resolution and more:

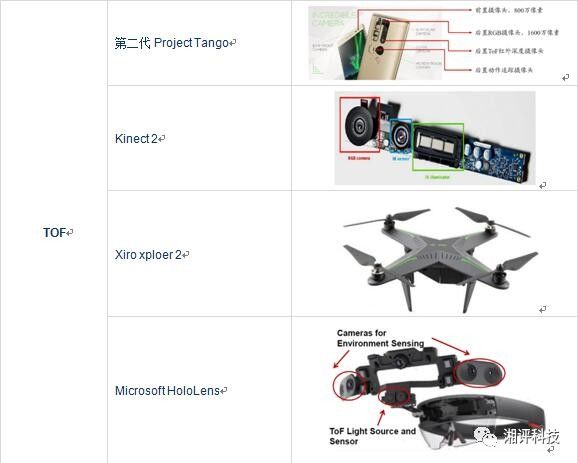

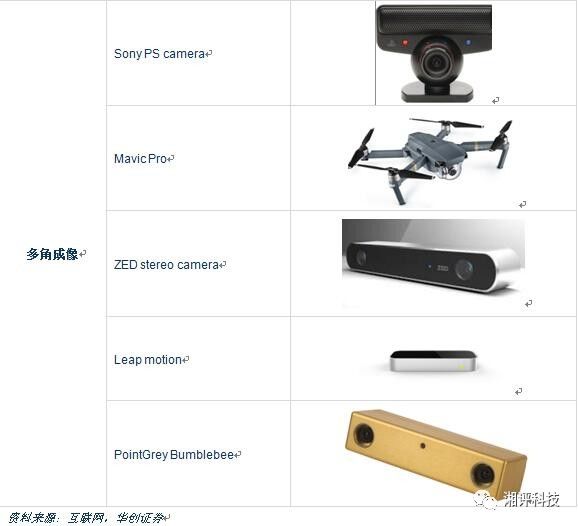

Judging from products already on the market, structured light and TOF are both mature in application and work on similar principles. Most earlier products used structured light, while TOF is increasingly common in the new generation. We believe TOF, with its advantages in software complexity, latency, precision and scanning speed, will become the most promising 3D camera technology of the future, while structured light, with its cost advantage and single-shot imaging, is expected to lead the way in mobile applications.

(2) International consumer electronics manufacturers have mature 3D Sensing camera technology, and Apple's accumulated expertise is the deepest

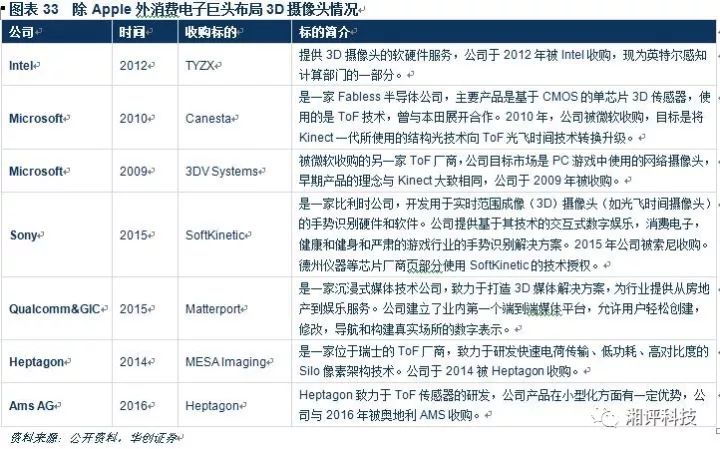

Since 2009, major consumer electronics giants have been moving into 3D cameras, and the pace has accelerated over the past two years! In recent years, giants such as Intel, Microsoft, Sony and Qualcomm have made acquisitions in TOF 3D sensors, gesture recognition algorithms and downstream application software.

Apple has long invested in 3D camera technology and its downstream applications, and we expect the iPhone 10th anniversary model to unveil this killer technology. Throughout the history of consumer electronics innovation, large end customers have been able to cultivate emerging markets, lead innovation trends and drive technological change across the industry. Once Apple adopts it, other manufacturers' high-end models will have to follow quickly, and the entire industry is expected to see explosive growth.

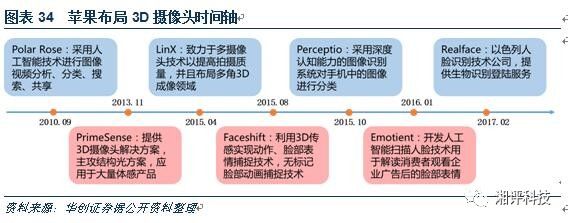

Apple first entered the 3D camera field in 2010 and has since acquired a number of 3D imaging, face recognition and gesture recognition companies: the Swedish algorithm company Polar Rose in September 2010; PrimeSense in 2013; the machine learning and image recognition company Perceptio, the Israeli 3D camera technology company LinX and the motion capture company Faceshift in 2015; and the face recognition system company Emotient in 2016.

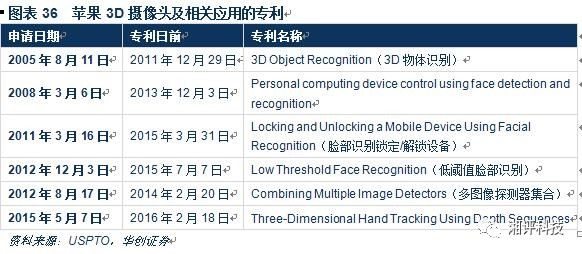

On the patent side, Apple has been filing patents on 3D camera technology and related applications since 2005, including gesture recognition using image depth information and face recognition using devices such as infrared sensors.

(3) The iPhone's distance sensor is well established, and the 3D Sensing camera is set for the tenth anniversary edition

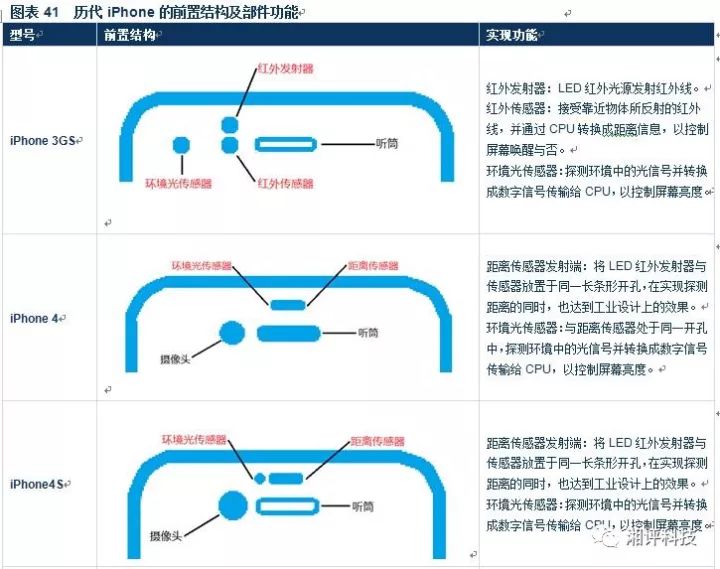

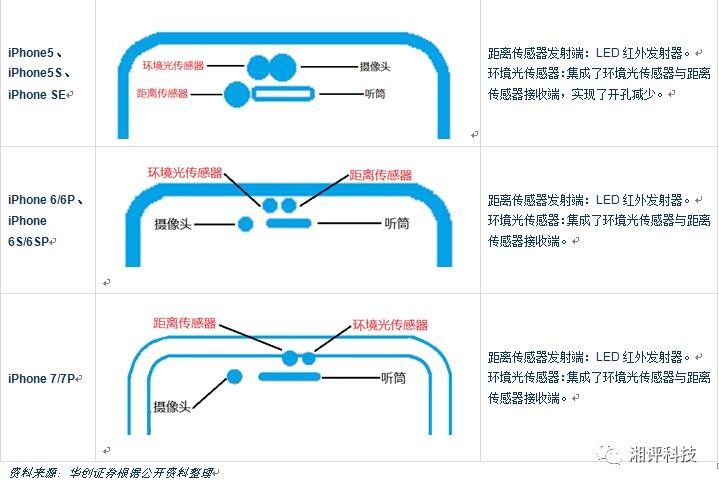

The distance sensor, which resembles 3D sensing in basic structure and principle, has been standard on the iPhone since the iPhone 3GS. Below we review the evolution of the iPhone's front-panel sensors in terms of structure, principle and function.

Apple has included a front-facing distance sensor since the original iPhone. It is mainly used to judge the distance between the user's head and the screen, so the screen turns off automatically when the phone is held to the ear. All previous iPhones have two front sensors (ambient light sensor + infrared distance sensor) and a front camera, arranged from right to left as front camera, light sensor and infrared distance sensor; the light sensor is usually hidden under ink and is hard to see.

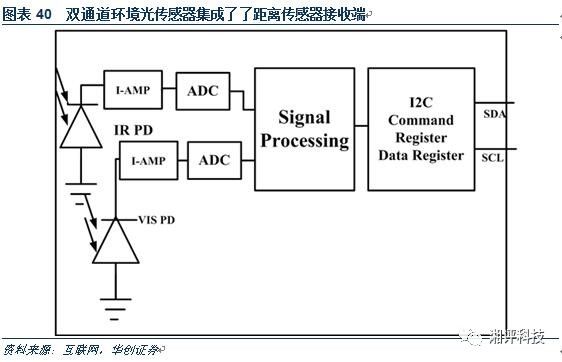

Distance sensor: an infrared diode emits infrared light. If an object is nearby, it reflects the light back to an infrared detector, and the signal passes through a series of logic control operations to the CPU, which decides whether to turn the screen on or off.
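The decision logic can be sketched as a threshold with hysteresis, so the screen does not flicker when the reading hovers near the cut-off. Subtracting an LED-off reading removes ambient infrared. The raw counts and both thresholds are hypothetical; the actual iPhone firmware is not public.

```python
class ProximityScreenControl:
    """Turn the screen off when an object is near, and on again when it moves away."""

    def __init__(self, near_threshold: int = 600, far_threshold: int = 400):
        # Hypothetical raw-count thresholds; far < near gives hysteresis.
        if far_threshold >= near_threshold:
            raise ValueError("far_threshold must be below near_threshold")
        self.near_threshold = near_threshold
        self.far_threshold = far_threshold
        self.screen_on = True

    def update(self, led_on_counts: int, led_off_counts: int) -> bool:
        """Process one measurement pair and return whether the screen should be on."""
        reflected = max(led_on_counts - led_off_counts, 0)  # remove ambient IR
        if self.screen_on and reflected >= self.near_threshold:
            self.screen_on = False      # phone raised to the ear
        elif not self.screen_on and reflected <= self.far_threshold:
            self.screen_on = True       # phone moved away
        return self.screen_on

ctrl = ProximityScreenControl()
readings = [(150, 100), (750, 100), (600, 100), (450, 100), (300, 100)]
print([ctrl.update(on, off) for on, off in readings])  # [True, False, False, True, True]
```

The third reading (500 counts after subtraction) sits between the thresholds, so the screen stays off instead of toggling.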

Ambient light sensor: mainly used to detect changes in the ambient light and convert them into digital signals that are output to the CPU.

In theory, the distance sensor needs two units, a transmitter and a receiver, so why are there only three openings on the front? Because Apple integrates the ambient light sensor with the distance sensor's receiver. The distance sensor uses two photodiodes: a broadband photodiode that detects light in the 300-1100 nm band, and a second photodiode behind a narrowband filter that detects infrared light. Subtracting the infrared signal from the broadband signal yields the ambient light signal.
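The subtraction described above can be written as visible = broadband - k·IR, where k is a calibration factor matching the two photodiodes' responses in the infrared band. The value of k, the counts-to-lux factor and the readings below are hypothetical; real sensors are calibrated per part, often with additional correction terms.

```python
def ambient_light(broadband_counts: float, ir_counts: float,
                  ir_coupling: float = 0.9, lux_per_count: float = 0.25) -> float:
    """Estimate visible ambient light from a broadband (~300-1100 nm) and an IR-only photodiode.

    ir_coupling: hypothetical factor scaling the IR channel to the IR share of the
    broadband channel. lux_per_count: hypothetical calibration to lux.
    """
    visible = broadband_counts - ir_coupling * ir_counts
    return max(visible, 0.0) * lux_per_count   # clamp noise-induced negatives

# Same visible light in both scenes, but the second (e.g. sunlight) is IR-rich.
print(ambient_light(1000, 200))
print(ambient_light(1540, 800))
```

Without the IR correction, the IR-rich scene would read about 50% brighter even though the visible light is the same.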

In summary, the front of the iPhone carries a front camera, a distance sensor and an ambient light sensor. The only design change across generations has been whether the distance sensor's receiver is integrated with the ambient light sensor, and functionally the sensor only detects whether the user's head is close to the handset in order to control the screen. We believe eight consecutive generations of distance sensors show that Apple has extensive experience designing and using ranging modules in phones.

We conclude that a major customer's 10th anniversary model is highly likely to introduce 3D camera technology based on structured light or TOF, and it may well carry more than one 3D camera module! This technology will bring 3D face recognition and gesture recognition to a new generation of products, opening a new wave of consumer electronics innovation!

Third, decoding the 3D Sensing camera industry chain: the biggest change lies in the IR VCSEL module (light source + optical components)

(1) Tearing down the 3D Sensing camera

A 3D camera adds TOF- or structured-light-based 3D sensing to a traditional camera. Both mainstream 3D sensing technologies are active, so compared with the traditional camera industry chain, the 3D camera industry chain mainly adds an infrared light source, optical components and infrared sensors, among other parts.

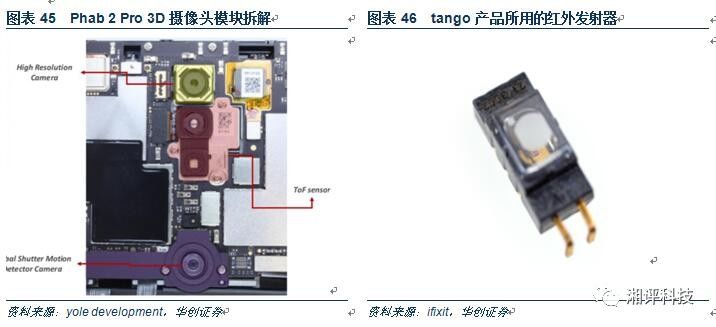

Below we break down the 3D camera industry chain through teardowns of specific products. First, take the Lenovo Phab 2 Pro, a phone on Google's Tango platform. Tango is an augmented reality computing platform developed by Google. It uses computer vision to let mobile devices such as smartphones and tablets detect their position relative to the world around them without GPS or other external signals.

On the back of the Lenovo Phab 2 Pro, from top to bottom, are the main camera, infrared sensor, infrared emitter, flash, motion tracking camera and fingerprint recognition module.

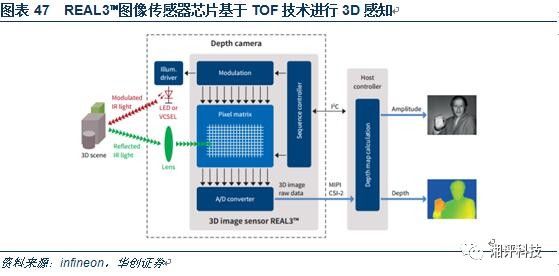

The Phab 2 Pro's 3D camera module uses the REAL3™ image sensor chip developed by Infineon and PMD tec. The chip combines analog and digital signal processing at high data rates, integrating a pixel array, control circuitry, an ADC and a high-speed digital interface on a single chip.

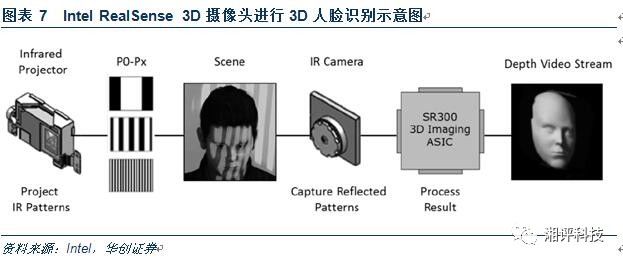

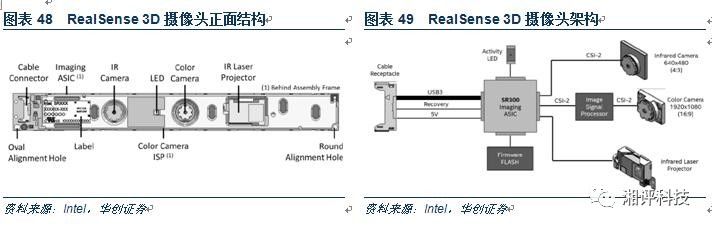

Next, take Intel's RealSense 3D camera as an example. It is based on structured light, which is also an active sensing technology.

The RealSense 3D camera consists mainly of an infrared camera, a conventional camera, an infrared laser emitter and a dedicated chip (SR300 ASIC). The laser emitter projects infrared light through a grating onto the object's surface, the infrared camera captures the projected pattern, and the object's position and depth are calculated from the pattern's displacement.

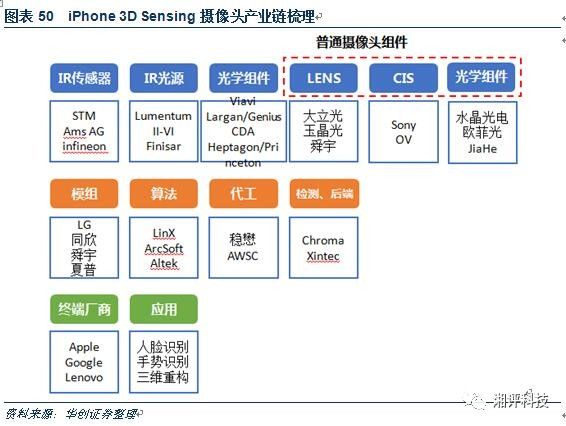

From teardowns of the mainstream 3D camera products already on the market, the 3D camera industry chain can be divided into:

1. Upstream: infrared sensor, infrared light source, optical component, optical lens and CMOS image sensor;

2. Midstream: sensor module, camera module, light source foundry, light source detection and image algorithm;

3. Downstream: terminal manufacturers and applications.


Shenzhen MovingComm Technology Co., Ltd. , https://www.movingcommtech.com